Community Canvas

| Project Goal

As a Living Harmony Track entry in the MIT Reality Hack, we aimed to build an AR/XR product using Snapdragon Spaces in 2.5 days.

| Project Outcome

An XR app and web platform that transforms community design through engaging, immersive experiences and empowers individuals to actively shape their surroundings by uniting the physical and virtual realms.

Most features were developed within 2.5 days.

Prize

MIT Reality Hackathon 2024 Finalist (12/96)

Best LeiaSR Application

Best Use of Snapdragon Spaces

Role

Unity Programmer, in collaboration with Mashiyat Zaman, Peixuan (Lily) Yu, Tom Xia, and Yidan Hu

Toolkit

Unity (C#) | Snapdragon Spaces Glasses & SDK | Figma | LeiaSR | Meshy AI | User Research | User Interview

Exhibition

MIT Reality Hackathon

XR Guild NYC

01. Research

01-1 Problem Identifying [at the Hackathon]

Given the theme of “Living Harmony”, we brainstormed around a few keywords: urban symbiosis, crowdsourcing, and transportation.

With AR/XR as the medium, we came up with a few use-case scenarios that interested us: stage design, crowdsourcing in a community environment, and subway entertainment design. After a second round of brainstorming, we arrived at a problem we all cared about:

How can we use AR to facilitate collective community space building?

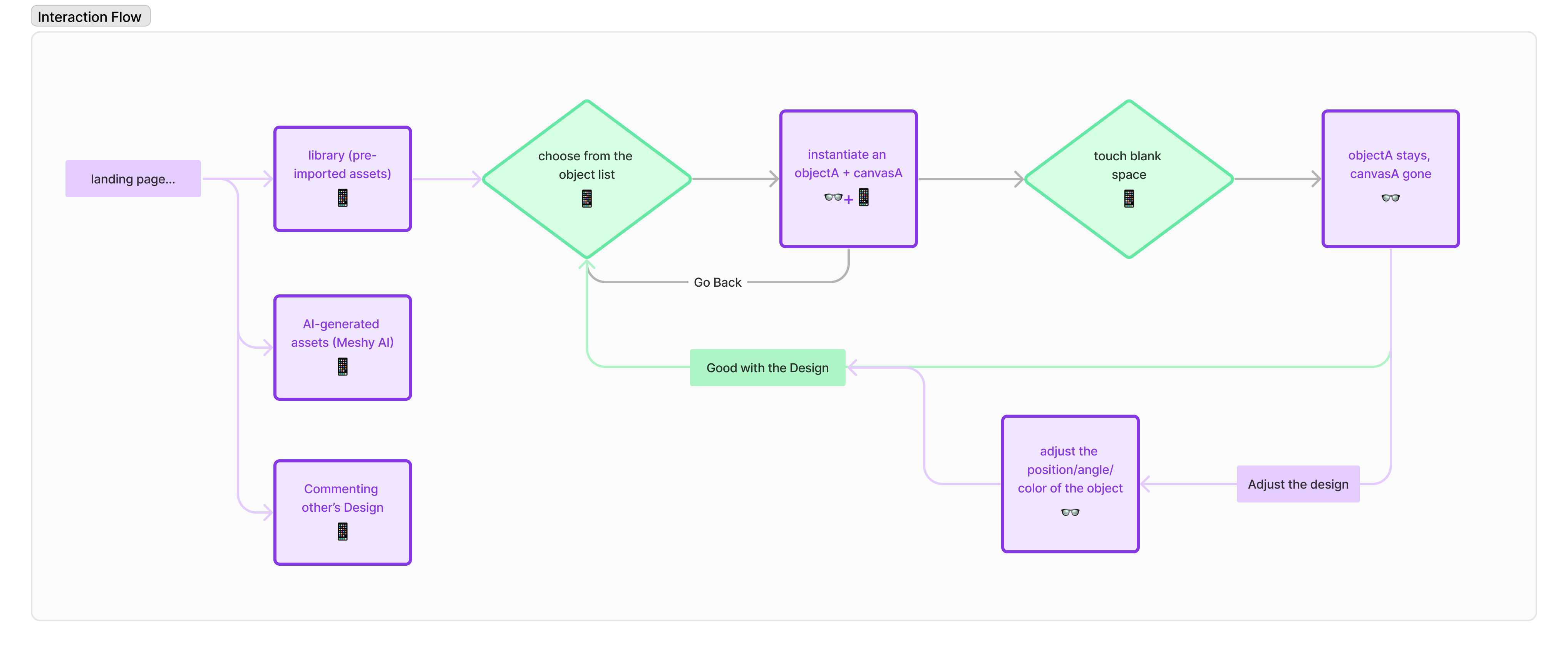

Targeting the residents of a community, we want to leverage AR technology to help them collectively design the community space. Beyond that, we would like community members to be able to share their memories of the space and the community with each other. Based on these needs, we came up with the first round of the user flow.

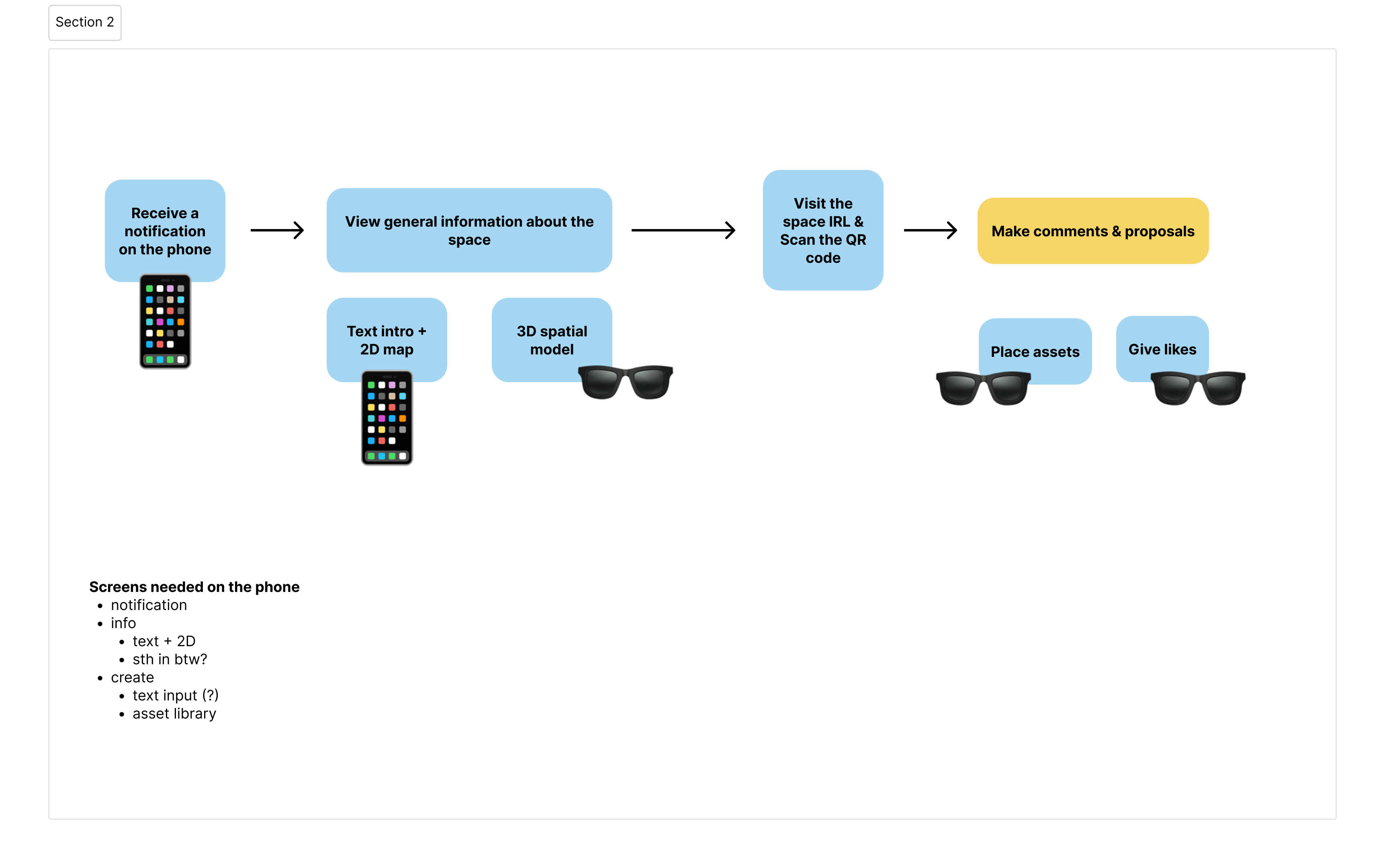

01-2 User Flow [at the Hackathon]

To better understand the question, we interviewed one groupmate’s connection, a landscape architect who used to work for a non-profit organization on community space planning and design.

From her, we learned what our current user flow was lacking and then worked out a clearer and more focused version, as shown below. She also introduced us to NYC’s Participatory Budgeting (PB), a democratic process in which community members directly decide how to spend part of a public budget. Participatory Budgeting also became the direction we continued to work on after the Hackathon.

Interviewing the Landscape Architect

Updated User Flow:

01-3 Problem Identifying

Encouraged by the positive feedback we received at MIT Reality Hack, we decided to take the project further to explore its potential. The first step was to talk to people, so we researched NYC’s PB process and generated a list of possible contacts. Meanwhile, we brought our AR project to multiple XR exhibitions and gatherings and tested it with potential end users. Over two weeks, we reached out to 40+ NYC council members and interviewed 5 of them, talked to 10+ people from the XR industry, and had 7 NYC residents user-test our application.

Contact List for Outreaching (Blurred)

01-4 Insights

We gained many valuable insights from our interviews. From the conversations with council members, we learned about the PB process in detail:

- The PB process is optional for every district and mainly aims to build connections with residents. Some newly appointed council members skip it because of time or resource limitations.

- District 37 is in its second year of PB and expects a large increase in submissions (75 → 300+).

- Most (80%) PB ideas are submitted online through the map. Ideas submitted in person are also uploaded to the map by staff.

- The map offers multiple language options, but digital accessibility did not come up in the conversation.

- The process of choosing the final PB projects to be implemented is:

  - first round of voting → volunteers sort the ideas → ideas are presented to budget delegates to generate the final ballot → voting again (e.g. choose 5 out of 6). The whole process happens twice to give residents multiple chances.

  - financial feasibility is a more important factor than how popular an idea is

- Since it’s the second year of PB, the council is doing a lot to educate people about the general process and what a possible PB project might look like.

- The district does a lot of outreach, such as holding workshops at schools, partnering with other districts, hosting town halls, and using visual tools like videos to demonstrate project ideas.

  - visual tools help a lot!

From the user tests conducted by my groupmate Mashiyat, here are our findings:

- Most people didn't feel like there was a significant change in the experience using the glasses. One person actually preferred the glasses, though ("I have enough screens in my life"), while another said they might be more useful in a communal setting.

- Most people understood the motivation behind the process, i.e., adding more ways of engaging in PB.

- Some people expressed that the phone version is more accessible.

- One person explained that the phone and glasses essentially serve different purposes.

- The map view in the phone version should indicate where you are.

Based on all the feedback and insights, we updated the user flow and the potential places where we could use AR. This is still in progress.

Updated User Journey

02. Design

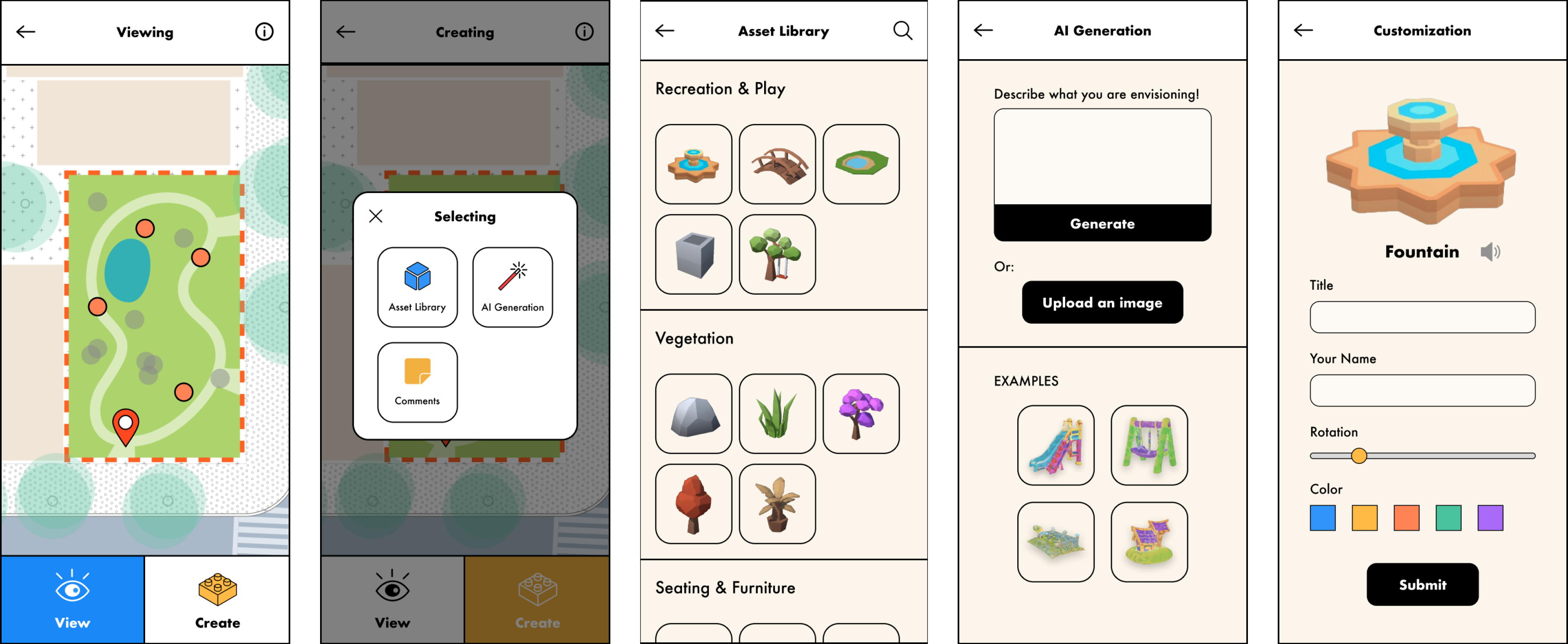

The app-side design was mainly completed by Lily and Tom. Throughout the process, we kept communicating with each other about technical feasibility and possible features.

02-1 Visual References

02-2 UI Design

03. Technical Development

03-1 Development [at the Hackathon]

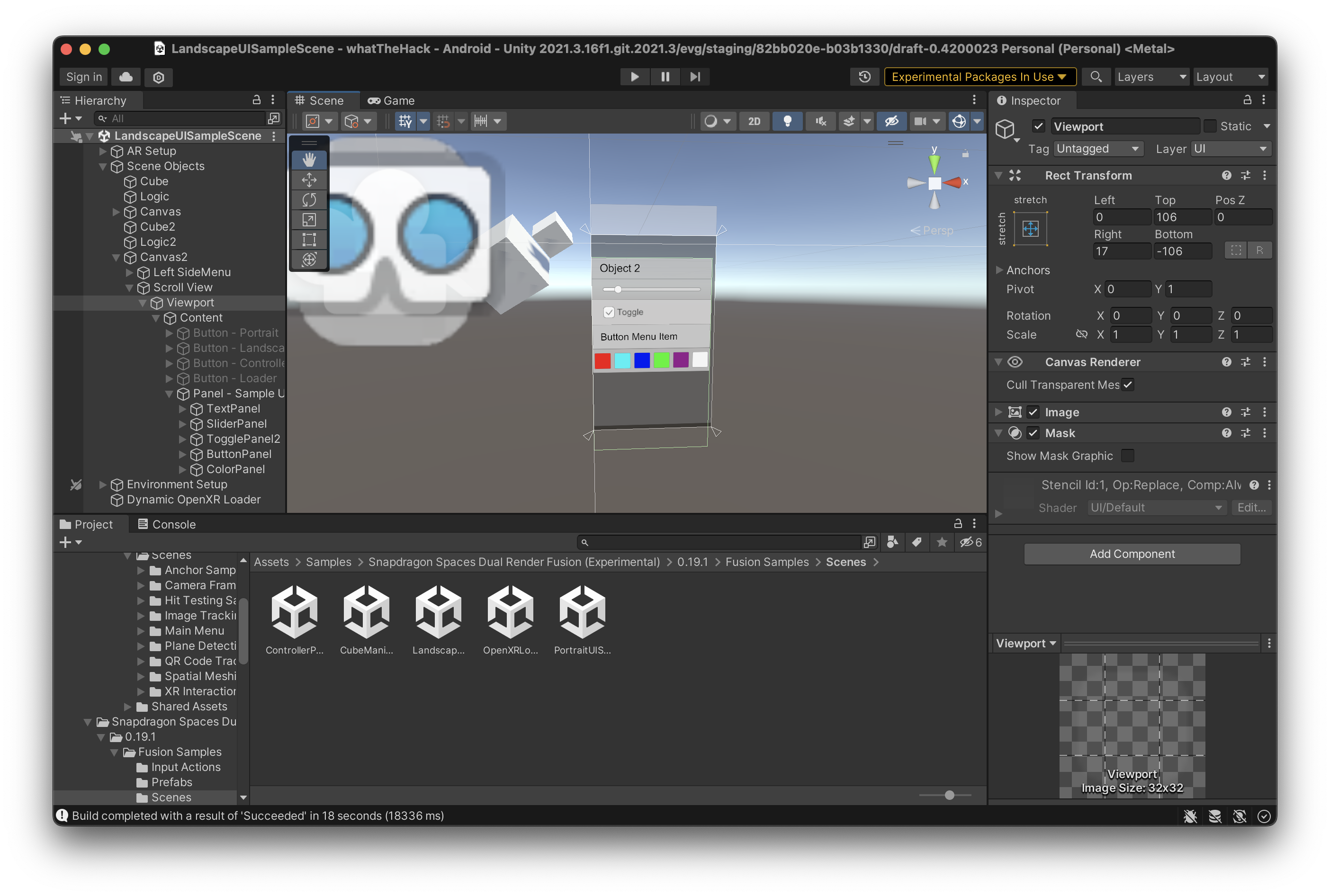

Developing at the Hackathon was challenging. Because of the time limit, development and design happened almost in parallel. We configured the environment and set up Snapdragon Spaces’ Dual Render Fusion SDK, whose examples and references gave us a basis to build on.

We started from the sample scene with its existing UI and developed on top of it. One challenge we ran into was bridging the device and Unity to smooth out the workflow. Because of the specifics of the equipment, the staff at Snapdragon Spaces suggested that we use ADB (Android Debug Bridge), which allowed us to test changes without plugging in our phone. Despite sluggish internet speeds caused by the high volume of participants at the hackathon, we were able to iterate on our app more efficiently.
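For readers unfamiliar with wireless ADB, here is a minimal Python sketch of what such a workflow can look like. It is not a record of our exact setup at the Hackathon: the device IP, port, and APK path are hypothetical placeholders, and the `adb tcpip` step still needs one initial USB connection before the cable can be unplugged.

```python
import subprocess


def wireless_adb_commands(device_ip, apk_path, port=5555):
    """Build the adb command lines for a cable-free install.

    device_ip, apk_path and port are hypothetical example values.
    """
    target = f"{device_ip}:{port}"
    return [
        # Run once while the phone is still on USB: restart adbd in TCP mode.
        ["adb", "tcpip", str(port)],
        # After unplugging, connect to the phone over the local network.
        ["adb", "connect", target],
        # Reinstall the freshly built APK on that device, keeping its data.
        ["adb", "-s", target, "install", "-r", apk_path],
    ]


def run_all(commands):
    """Execute each command in order and stop at the first failure."""
    for argv in commands:
        subprocess.run(argv, check=True)


if __name__ == "__main__":
    for argv in wireless_adb_commands("192.168.0.42", "Builds/app.apk"):
        print(" ".join(argv))
```

Printing the commands first, as the `__main__` block does, makes it easy to check them before calling `run_all` on a real device.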

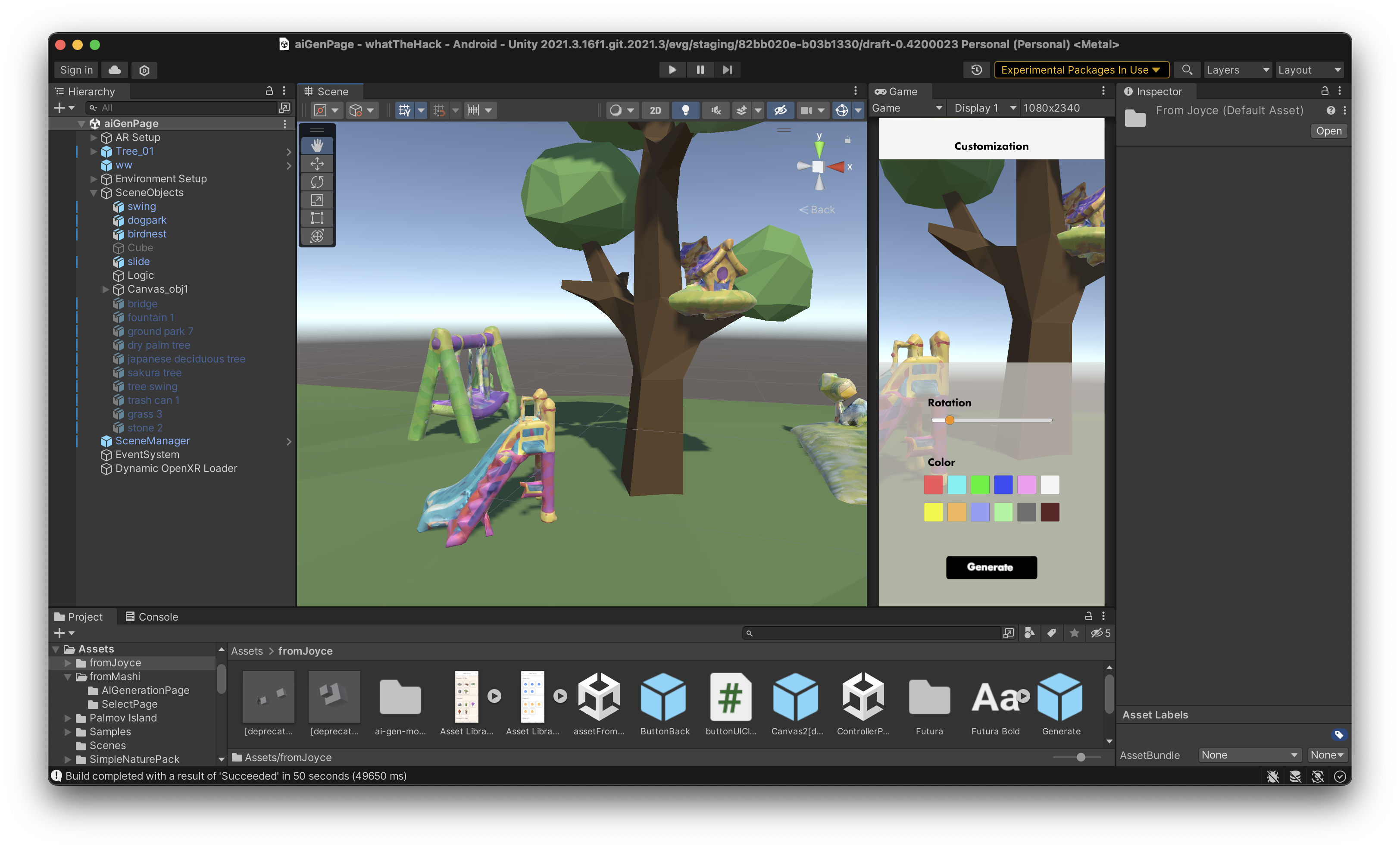

The prototype we worked on during the Hackathon shows people wearing the goggles a visualization of what the co-designed space will look like, controlled through interfaces on the phone.

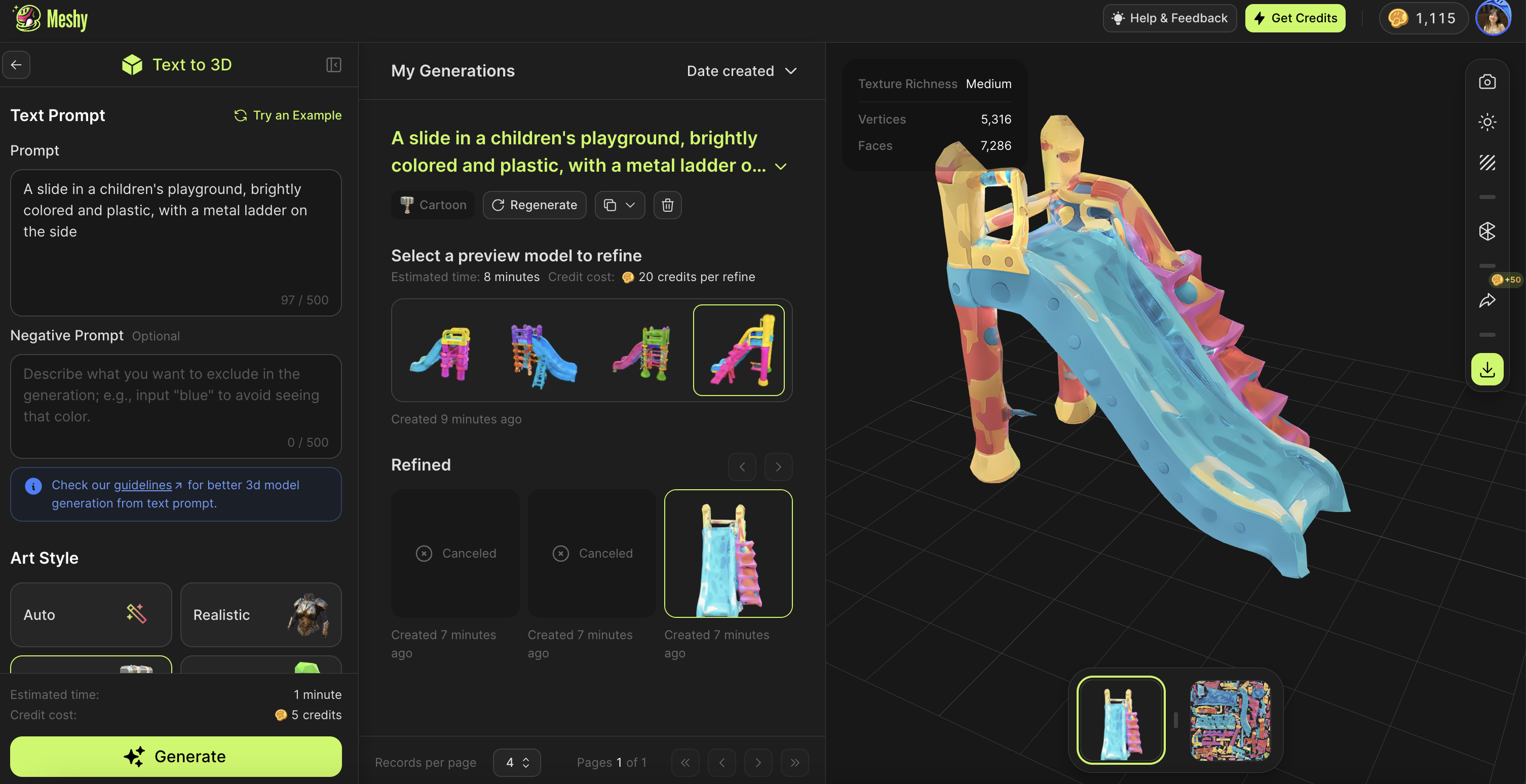

The flow we completed was the library one, and we also imported a few assets generated with Meshy AI. We are still working on the AI-generated and Commenting (shared experience) features. Through programming, we integrated the models and the interfaces designed by our groupmates into the Unity project, which runs on the Lenovo phone and the glasses. By adjusting objects with the phone controls, users can see the models they are satisfied with placed in the space through AR.

Meshy AI

Unity Screenshot (scene / phone view)

03-2 Development [on-going]

A. Customized model for users to fetch

Our current development focuses on real-time AI model generation in Unity. We explored Meshy’s API and the Stability API, and after a lot of programming, debugging, and research, we realized that users won’t be patient enough to wait for real-time generation in the space, especially when they haven’t been told that an AI is generating a model for them at that moment. We therefore turned to Sketchfab, where users can search by keyword in real time and get a model matching their input, which is a better fit.
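The keyword lookup can be sketched in a few lines of Python. This is an illustration rather than our Unity code: it assumes Sketchfab's public Data API v3 search endpoint with the `type`, `q`, and `downloadable` query parameters, and the sample payload below is a made-up example of the response shape (a `results` list whose items carry `uid` and `name`).

```python
from urllib.parse import urlencode

# Assumed base URL of the Sketchfab Data API v3 search endpoint.
SEARCH_ENDPOINT = "https://api.sketchfab.com/v3/search"


def build_search_url(keyword, downloadable=True):
    """Build a model-search URL for a user-typed keyword."""
    params = {
        "type": "models",
        "q": keyword,
        "downloadable": "true" if downloadable else "false",
    }
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


def pick_models(payload, limit=3):
    """Return (uid, name) pairs for the first few search results."""
    results = payload.get("results", [])
    return [(item["uid"], item["name"]) for item in results[:limit]]


if __name__ == "__main__":
    print(build_search_url("humanskeleton"))
    # Hypothetical payload, only to show the parsing step.
    sample = {"results": [{"uid": "abc123", "name": "Human Skeleton"}]}
    print(pick_models(sample))
```

Filtering for downloadable models matters here because only those can be fetched and placed in the scene; the actual download is a separate request that this sketch leaves out.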

The model a user gets by entering “humanskeleton”

B. Geo-spatial sharing

As part of the sharing feature, we want to anchor the AR pieces at real-world locations (in New York City). We would like people to come to the sites IRL and see other community members’ creations. To achieve this, we decided to use geospatial features. We first tested Google’s Geospatial API. It was stunning to see the whole world rendered in Unity; however, for reasons we could not identify, we weren’t able to build the project. We switched to the AR + GPS asset from the Unity Asset Store and successfully saw the object in AR right next to us.
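The core idea behind GPS-based anchoring can be shown with a small Python sketch. It is not the internals of the AR + GPS asset or of Google's Geospatial API: it is a simple equirectangular approximation that converts an anchor's latitude and longitude into an east/north offset in meters from the user, which is accurate enough over the short distances of a neighborhood site. The coordinates used are made-up examples, and mapping east to Unity's x axis and north to its z axis is an assumption.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def gps_to_local_offset(user_lat, user_lon, anchor_lat, anchor_lon):
    """Return the (east, north) offset in meters from user to anchor.

    Uses an equirectangular approximation, which is reasonable when the
    two points are at most a few kilometers apart.
    """
    d_lat = math.radians(anchor_lat - user_lat)
    d_lon = math.radians(anchor_lon - user_lon)
    mean_lat = math.radians((user_lat + anchor_lat) / 2.0)
    north = d_lat * EARTH_RADIUS_M
    # Longitude lines converge toward the poles, so scale by cos(latitude).
    east = d_lon * EARTH_RADIUS_M * math.cos(mean_lat)
    return east, north


if __name__ == "__main__":
    # Hypothetical user and anchor positions in New York City.
    east, north = gps_to_local_offset(40.7300, -73.9950, 40.7305, -73.9945)
    # Assumed Unity mapping: x = east, y = up, z = north.
    print(f"place object at x={east:.1f} m, z={north:.1f} m")
```

In practice phone GPS can be off by several meters, so an anchor placed this way lands near the intended spot rather than exactly on it.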

04. Latest Progress

After evaluating accessibility and the technical elements, we decided to make the project web-based. Mashiyat Zaman led the main development. Check it out:

Thank you for reading 🛝